Successful data strategies are built on efficiency, transparency and utility

If you missed part one: The Narrative Manifesto - Achieving the Promise of the Data Economy.

Data Markets Need to be Efficient

Executing a Data Strategy Should Be Easy

Friction is enemy #1 of any emerging market. Data, being a complex asset used in countless ways, is especially susceptible to market friction. As this market continues to mature, every effort must be made to eliminate inefficiency through standardization, automation, best practices, and common frameworks that simplify how companies interact. The challenge is that, while ease of use is critical, we must be vigilant not to oversimplify data to the point of removing its utility and specificity.

Today’s Data Markets are Inefficient

The efficient-market hypothesis states that asset prices fully reflect all available information, making it impossible to “beat the market” consistently, since market prices should react only to new information. Today’s data markets are inefficient because their operators create information deserts through opacity: when information is hidden, prices cannot reflect it.

The Democratization of Data Creates Shared Value

We are firm believers that competition drives innovation. As applied to data, one existential threat to this thesis is the trend toward locking data into silos managed by quasi-monopolistic companies. Data will not fulfill its promise for innovation if it is not broadly available.

We Need to Eliminate Bad Actors

Regulations + Best Practices Will Create a More Sustainable Ecosystem

Historically, regulators have struggled to keep up with the pace of innovation in data and technology, but they are starting to catch up. Frameworks like GDPR, HIPAA, and the CCPA have been enacted to ensure that organizations respect consumer privacy, and they have codified many best practices that all organizations need to follow. We believe this will be good for data-dependent organizations. Trust among all participants in the data economy is a requirement for it to reach its full potential.

Narrative is Acting on these Beliefs

We started Narrative nearly three years ago because we understood the opportunity that data provides. We have held these fundamental beliefs from day one and we’ve been working to provide software solutions that allow companies to better capture that potential. Today we have over fifty companies using our products, but the journey has just begun. In the coming months, we’ll be rolling out many features that will continue to empower participants in the data economy – both those trying to monetize data and those focusing on data acquisition – to create better outcomes.

Data Needs to be Useful and Specific

Data alone is not a strategy

It might seem obvious that data needs to be useful in order to have any value, but as in any emerging market, we’ve seen organizations that want to check a box without fully understanding what they are getting. Checking the box is not a long-term strategy, and we’re already seeing companies try to measure the value of their data strategies. Unfortunately, market and technological realities make that measurement difficult. At Narrative, we’re working on solving those challenges by reimagining how the data economy operates.

Definitions

Specific

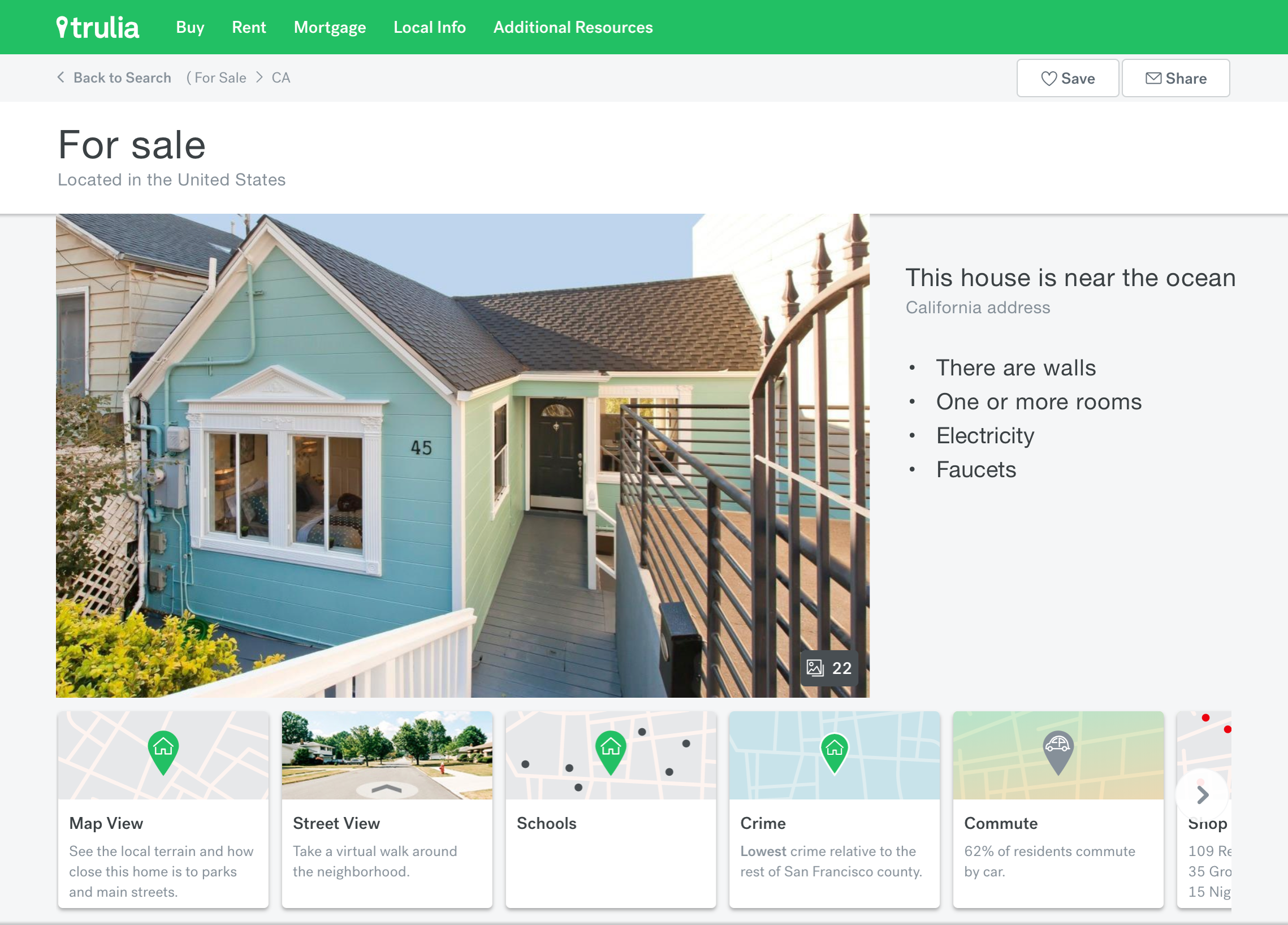

When we talk about data specificity, we’re really talking about the amount of information a given piece of data can encode. Stating that a house is big is less specific than saying it is 6,000 square feet. Specificity is correlated with utility: the more information a piece of data encodes, the more likely it is to contain useful information. That said, specificity alone does not guarantee utility.
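One rough way to quantify “amount of information” is to count how many distinct values a field can take: a field with N equally likely values encodes at most log2(N) bits. The sketch below compares a two-valued size label with a square-footage field. The two labels and the 500 to 10,000 square-foot range are illustrative assumptions, not figures from Narrative’s products.

```python
import math

def max_bits(num_possible_values: int) -> float:
    """Upper bound on the information (in bits) a field can encode,
    assuming every possible value is equally likely."""
    return math.log2(num_possible_values)

# Coarse attribute: the house is either "big" or "small" (assumed two labels).
size_label_bits = max_bits(2)

# Specific attribute: square footage to the nearest square foot,
# assumed here to range from 500 to 10,000 inclusive.
square_feet_bits = max_bits(10_000 - 500 + 1)

print(f"size label:     {size_label_bits:.1f} bits")
print(f"square footage: {square_feet_bits:.1f} bits")
```

The size label carries at most 1 bit, while the square-footage field can carry roughly 13.2 bits. This is an upper bound on specificity only; it says nothing about whether the field is useful for a given task.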

Useful

Whereas specificity is universal (a piece of data is equally specific no matter who uses it), utility has to be defined in the context of the user. A data point may be useful for one task but not for another. One way to define data utility is in terms of signal versus noise; in fact, the signal-to-noise ratio is sometimes used metaphorically to refer to the ratio of useful information to false or irrelevant data. To return to our house analogy: you may know that the house is painted white and has a three-foot-tall picket fence. Both details are very specific, but neither is useful if your primary concern is the house’s square footage or whether it has enough bedrooms and bathrooms to comfortably fit your family.

The measure of utility will depend on how the data is being used. In the context of machine learning, it may be defined as how much the data improves the model’s predictive performance. In the context of digital advertising, it may be measured by the increase in propensity to buy a particular product.
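To make the machine-learning definition concrete, here is a minimal sketch that scores a single feature by how much it reduces squared error compared with always predicting the average. The house data are made-up toy numbers, and the choice of one-variable least squares and in-sample mean squared error is our assumption for illustration, not a method Narrative describes.

```python
from statistics import fmean  # Python 3.8+

def mse(actual, predicted):
    """Mean squared error between two equal-length sequences."""
    return fmean((a - p) ** 2 for a, p in zip(actual, predicted))

def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    x_bar, y_bar = fmean(xs), fmean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        # A constant feature carries no information; fall back to the mean.
        return 0.0, y_bar
    slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sxx
    return slope, y_bar - slope * x_bar

def utility(feature, target):
    """Reduction in squared error versus always predicting the mean target."""
    baseline = mse(target, [fmean(target)] * len(target))
    slope, intercept = fit_line(feature, target)
    with_feature = mse(target, [slope * x + intercept for x in feature])
    return baseline - with_feature

# Hypothetical toy data: five houses.
square_feet = [1000, 1500, 2000, 2500, 3000]
fence_height_ft = [3, 4, 3, 4, 3]
price_thousands = [200, 290, 410, 500, 600]

print(f"square footage: {utility(square_feet, price_thousands):.1f}")
print(f"fence height:   {utility(fence_height_ft, price_thousands):.1f}")
```

On these toy numbers, square footage cuts the error dramatically while fence height barely moves it, yet fence height could score well against a different target, which is the point about utility depending on the task. A real evaluation would measure error on held-out data, since in-sample least squares with an intercept never makes the error worse.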

Foundational Concepts

One of our focuses at Narrative is building software that maximizes the potential for data to be specific and useful. To do that, we have built all of our products around a handful of foundational concepts.

A Focus on Raw Data

A common mistake we see is organizations aggregating their data assets in an effort to simplify things. By definition, aggregation makes the data less specific. Aggregated data can still be useful, but aggregation can’t be undone. Put plainly, you can’t un-bake the cake.

At Narrative our focus has always been on raw data – data that has gone through no aggregation.
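The irreversibility of aggregation is easy to demonstrate. In this minimal sketch, two hypothetical sets of raw house sizes collapse to the same (count, mean) summary, so nothing in the summary can tell you which raw data produced it, or answer a more specific question such as how many houses exceed 4,000 square feet.

```python
from statistics import fmean  # Python 3.8+

def aggregate(square_feet):
    """Collapse raw records into a (count, mean) summary."""
    return len(square_feet), fmean(square_feet)

def count_larger_than(square_feet, threshold):
    """A question that needs the raw records to answer."""
    return sum(1 for size in square_feet if size > threshold)

# Two hypothetical neighborhoods with very different raw data.
neighborhood_a = [1800, 2000, 2200]
neighborhood_b = [600, 600, 4800]

# Both summaries are identical...
print(aggregate(neighborhood_a))  # (3, 2000.0)
print(aggregate(neighborhood_b))  # (3, 2000.0)

# ...but only the raw data can tell the neighborhoods apart.
print(count_larger_than(neighborhood_a, 4000))  # 0
print(count_larger_than(neighborhood_b, 4000))  # 1
```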

Fine-Grained Controls

Raw data ensures that we retain specificity, but it doesn’t guarantee that the data will be useful. As we defined above, utility is in the eye of the beholder: a valuable piece of data for one person might be noise to another. As a result, there is no one-size-fits-all solution. Instead, we needed a solution that allowed for bespoke data executions.

We accomplished this by implementing a set of fine-grained controls with which our customers can package and select data. For example, if someone is building a predictive model focused solely on citizens of the United States, they can easily filter for records indicating that they originated in the US. If a given record doesn’t specify where it originated, and thus isn’t specific enough, it gets filtered out. If a record is specific but originated outside of the US, it also gets filtered out.

We’ve created a solution that ensures that our customers will only access data that is both specific and useful. While the country example is trivial, these filters apply across dozens of different attributes and can act as the first line of defense in increasing the signal-to-noise ratio.
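The country filter described above can be sketched as a small predicate combinator. This is a minimal illustration, not Narrative’s actual API: the record layout, the `age` attribute, and the helper names `make_filter` and `apply_filters` are hypothetical. Each filter drops records that either lack the attribute (not specific enough) or have a value that fails the test (not useful for this task), and several filters can be stacked across attributes.

```python
from typing import Any, Callable, Iterable, List, Mapping

Record = Mapping[str, Any]
RecordFilter = Callable[[Record], bool]

def make_filter(attribute: str, predicate: Callable[[Any], bool]) -> RecordFilter:
    """Keep a record only if it specifies `attribute` and the value passes
    `predicate`. Records missing the attribute are dropped."""
    def keep(record: Record) -> bool:
        value = record.get(attribute)
        return value is not None and predicate(value)
    return keep

def apply_filters(records: Iterable[Record], filters: List[RecordFilter]) -> List[Record]:
    """Return the records that pass every filter."""
    return [r for r in records if all(f(r) for f in filters)]

# Hypothetical records.
records = [
    {"id": 1, "country": "US", "age": 34},
    {"id": 2, "country": "CA", "age": 41},  # specific, but outside the US
    {"id": 3, "age": 29},                   # no country: not specific enough
    {"id": 4, "country": "US"},             # US, but no age
]

us_only = make_filter("country", lambda c: c == "US")
adults = make_filter("age", lambda a: a >= 18)

print([r["id"] for r in apply_filters(records, [us_only])])          # [1, 4]
print([r["id"] for r in apply_filters(records, [us_only, adults])])  # [1]
```

Treating a missing attribute as a rejection, rather than a pass, is what lets the same mechanism screen for both specificity and usefulness.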

Permissioned and Compliant

As a complement to the fine-grained controls provided by our products, Narrative has placed compliance, privacy, permissioning, security, and trust at the center of our product. These features take a number of forms, but we firmly believe that, to build a more sustainable data economy, they must be treated as core principles rather than as secondary issues or annoyances.

Summary

In creating a product that sticks to the broad principles outlined here, we’ve made our partners’ lives easier and given them more power to re-envision their data strategies. Our work has only just begun, and we’re excited to show off some of the new features we’ve been working on in the coming months.